Many people in the evidence-based fitness world are aware that commercially accessible body composition measurement devices have some major shortcomings, and that they often provide body-fat estimates with unacceptably large errors. When I say “unacceptable,” I mean the errors are so large that the body-fat estimates become, for all intents and purposes, unusable for the vast majority of practical applications. For example, a person might get their body-fat tested via BodPod or DXA and understand that a reading of “15% body-fat” could very realistically represent a “true” body-fat level of (for instance) 12% or 18%. In other words, the device merely confirmed a range of plausible values that was as large as (or larger than) the range they could have estimated without any measurement at all.

Generally speaking, there is widespread acceptance that these common measurement devices have poor “validity,” which describes how close an estimate is to the true value. However, I’ve noticed a common assumption among many fitness enthusiasts, including people who generally understand that body-fat assessments might not be perfectly valid: people seem to assume that these very same devices have high “reliability,” which describes the repeatability of an estimate. Along these lines, many people seem to believe that even the shoddiest and least valid body composition devices can be used to effectively track changes over time. The expectation is that a given device might typically overestimate their body-fat by (for example) 4 percentage points, but it would make the same overestimation on a consistent basis. So, the device might say they’re at 20% body-fat when they’re really at 16% body-fat, but if they drop their body-fat percentage from 16% to 10%, the device will reflect that change by showing a drop from 20% to 14%. But is this true? Do the empirical data actually support this assumption?

When I was a graduate student, I ran a small pilot study that had a profound impact on the way I viewed body composition assessment. I never got around to publishing the study as a full paper, but I did present some of the data in abstract form (2). The project involved doing a huge battery of body composition assessments for individuals engaged in a weight loss program. At baseline, body composition was assessed with several different measurement devices, including air displacement plethysmography (BodPod), DXA, bioelectrical impedance spectroscopy, and A-mode ultrasound. Participants were also encouraged to schedule a follow-up visit to retest these measurements after losing at least 4.5 kg of body mass. In running these assessments, the first observation that caught my attention was the eye-opening magnitude of discrepancies between different devices. I knew the research well enough to anticipate differences, but it’s still pretty weird to tell someone that you just “measured their body-fat” twice in the span of ten minutes, and that the two values differ by 8-10 percentage points.

The second observation that caught my attention was how poorly these devices tracked longitudinal changes in body composition. We followed typical guidelines for pre-visit standardization (that is, we provided guidelines related to eating, drinking, exercising, and consuming alcohol or caffeine within certain proximities to the laboratory visit) and had experience proficiently administering all of the measurements, but these devices performed quite poorly when compared to the most reliable reference method available (in our lab, that was a 4-compartment model using a combination of BodPod, DXA, and bioelectrical impedance spectroscopy). The most informative analysis we did involved the use of Bland-Altman plots, which provide 95% limits of agreement. Without getting into overly technical jargon, these 95% limits of agreement tell us how much error an individual can realistically expect when comparing each device to the high-quality 4-compartment reference method. When it came to tracking changes, each individual device had limits of agreement that were above ±2 body-fat percentage points, and they were generally well above 2.

Of course, our pilot study had some limitations. Most notably, the sample size was small for the longitudinal part of the study (n = 15 pairs of pre-test and post-test measurements), and the study was only open to people who had BMIs in the overweight or obese category at baseline and were intentionally losing weight. A recent study by Tinsley et al (1) is a fantastic piece of complementary research; it has a larger sample size (n = 19), recruited leaner individuals, and investigated individuals who were trying to gain weight rather than lose it. To be clear, I’m not suggesting that one set of study characteristics is more valid or more important than the other; rather, if we want to know how effectively these types of devices track changes in body composition, we’d like to see how they perform across different populations with different body composition characteristics and different directions and magnitudes of weight change.

In all honesty, this Research Spotlight isn’t going to do this study justice. Dr. Tinsley does excellent work, and this is an absolute beast of a paper. They reported body composition estimates (body-fat percentage, fat mass, and fat-free mass) from over a dozen different estimation methods using several different combinations of devices, while also comparing estimates obtained with the use of standardized versus unstandardized pre-visit guidelines. The paper has over 10 tables and figures, and some of the figures have up to 14 individual panels. All of that is to say, this paper reports a tremendous amount of information, and I’ll only be hitting the most practical highlights.

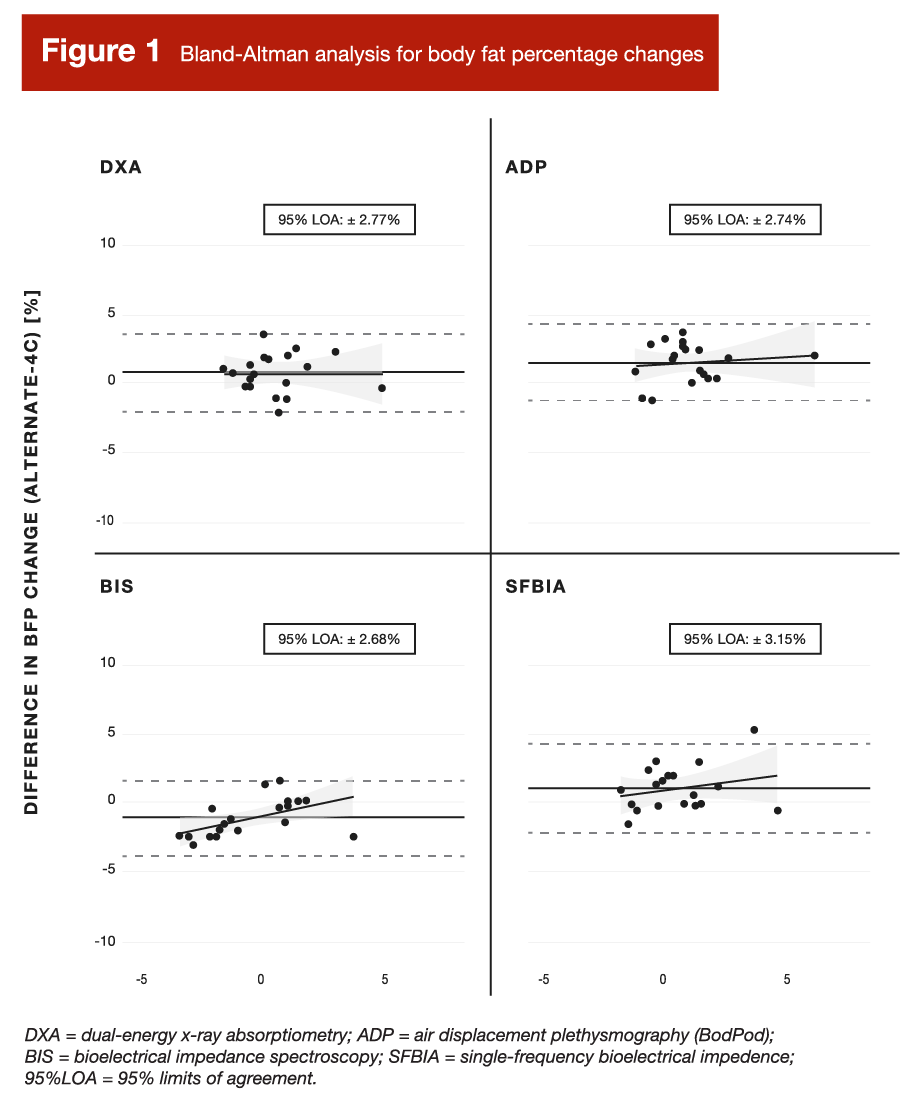

To be concise, most of the commercially accessible devices did pretty poorly when it comes to tracking changes in body composition. Some of the multi-compartment models that combine total body water estimates (from bioelectrical impedance spectroscopy) with other BodPod and DXA measurements did well, but these methods are inaccessible to the vast majority of people (I’ve personally never heard of them being offered at a sport, fitness, or health facility of any kind). Figure 1 presents data for some fairly common measurement methods: DXA, air displacement plethysmography (BodPod), single-frequency bioelectrical impedance (most commonly used in bathroom scales and handheld devices in gyms), and bioelectrical impedance spectroscopy. To be clear, I selected the first three because they’re fairly accessible in gyms and health care facilities; the fourth method (bioelectrical impedance spectroscopy) is rarely accessible, but I included it because it’s used in a decent number of studies that you might come across in future reading. The x-axes in Figure 1 represent average values from the two methods being compared (the listed device and the 4-compartment reference method). For example, if DXA said your body-fat change was +4 percentage points and the 4-compartment reference method said your body-fat change was +2 percentage points, the average would be +3 percentage points. The y-axes represent differences between the listed device and the 4-compartment reference method. Since the reference method is assumed to be the most valid assessment, the y-axes therefore represent the error values of the devices that are being compared to it. So, in that same example, DXA would have overestimated the change by 2 percentage points.
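
For readers who like to see the arithmetic, here’s a minimal sketch in Python of how the values behind a Bland-Altman plot are built. The numbers are hypothetical and made up purely for illustration (they are not data from Tinsley et al or from our pilot study), and the function name `bland_altman` is my own. The sketch assumes the common convention of defining 95% limits of agreement as the mean difference ± 1.96 standard deviations of the differences; a given paper may calculate them slightly differently.

```python
import statistics


def bland_altman(device_changes, reference_changes):
    """Return the plotted points and 95% limits of agreement.

    Inputs are per-person changes in body-fat percentage points.
    Assumes the common convention: limits = bias +/- 1.96 * SD of differences.
    """
    # x-axis: average of the two methods for each person
    means = [(d + r) / 2 for d, r in zip(device_changes, reference_changes)]
    # y-axis: device minus reference, i.e. the device's error for each person
    diffs = [d - r for d, r in zip(device_changes, reference_changes)]

    bias = statistics.mean(diffs)      # average error across people
    spread = statistics.stdev(diffs)   # sample standard deviation of the errors
    lower = bias - 1.96 * spread
    upper = bias + 1.96 * spread
    return means, diffs, bias, lower, upper


# Hypothetical changes for six people (NOT real study data).
device = [3.0, -1.0, 2.5, 0.5, 4.0, -2.0]
reference = [1.0, 0.5, 2.0, 1.5, 1.5, -0.5]

means, diffs, bias, lower, upper = bland_altman(device, reference)
print(f"bias: {bias:+.2f} percentage points")
print(f"95% limits of agreement: {lower:+.2f} to {upper:+.2f}")
```

The thing to notice is that the average error (the bias) can sit close to zero while the limits of agreement stay wide. That’s the difference between a device looking fine at the group level and being untrustworthy for any one person.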

Just as we found in our pilot study, the limits of agreement were all above ±2 body-fat percentage points. That’s pretty large when you consider that the participants in this study were completing a short-term (six-week) weight gain intervention, where the average weight gained was 4.2 kg with a range of 0.5 to 8.5 kg. Let’s say you started at 82 kg with 15% body-fat, you gained a couple of kilograms over this six-week period, and it was clearly a mixture of some fat mass and some fat-free mass. If you hypothetically gained 0.86 kg of fat-free mass and 1.14 kg of fat mass (representing an increase from 15% to 16% body-fat), these methods (with limits of agreement of around ±3 body-fat percentage points) might say your body-fat changed by anywhere from -2 to +4 percentage points. I suspect you would’ve already known that before lying down on the DXA table.
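
If you’d like to check the numbers in that hypothetical, here’s a short Python sketch. The starting values come straight from the example above; the variable names are my own, and for simplicity it assumes the ±3 point limits of agreement are centered on the true change (that is, no systematic bias), which won’t be exactly true for any real device.

```python
# Starting point from the hypothetical example in the text.
start_mass_kg = 82.0
start_body_fat_pct = 15.0
fat_gain_kg = 1.14
fat_free_gain_kg = 0.86

# Fat mass before and after the six-week period.
start_fat_kg = start_mass_kg * start_body_fat_pct / 100
end_fat_kg = start_fat_kg + fat_gain_kg
end_mass_kg = start_mass_kg + fat_gain_kg + fat_free_gain_kg

end_body_fat_pct = 100 * end_fat_kg / end_mass_kg
true_change = end_body_fat_pct - start_body_fat_pct

# Assumption: limits of agreement of about +/-3 points, centered on the
# true change (zero bias). Real devices may also be biased.
limits = 3.0
lowest_reading = true_change - limits
highest_reading = true_change + limits

print(f"body-fat: {start_body_fat_pct:.1f}% -> {end_body_fat_pct:.1f}%")
print(f"true change: {true_change:+.1f} percentage points")
print(f"plausible device readings: {lowest_reading:+.1f} to {highest_reading:+.1f}")
```

In this scenario, a true change of about one percentage point sits inside a window roughly six points wide, so a reading near the bottom of that window would point in the opposite direction of what actually happened.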

As someone who has competed, coached people, and conducted more body composition tests than anyone would ever want to with most of the consumer-accessible devices on the market, I find it harder and harder to identify situations where measuring your body composition makes sense. Physique sports are scored based on visual assessment, so your eyes tell you more than a BodPod ever could. Other sports are based on sport-specific skills and physical capabilities, and you won’t learn that from a DXA. One might wish to make health-related inferences based on body composition testing, but you’re better off with more clinically relevant biomarker tests that correlate more strongly with specific health outcomes. If you have health concerns related to bone density, then a DXA scan makes sense. If you have a reason to quantify group-level (not individual-level) descriptive data or changes over time (such as describing a cohort of participants in a research study, or monitoring program effectiveness among an entire team of athletes), body composition assessment might make sense. For all other applications, you’ll probably do just as well (if not better) with a rough estimate that’s guided by visual assessment, weight monitoring, and changes in the way your clothes fit. If you wanted to go the extra mile, it might be justifiable to track certain circumferences or skinfold thicknesses over time, but converting them to an estimated body-fat percentage value is generally unnecessary and unhelpful.

To clarify, I’m not merely arguing that body composition assessment is typically unnecessary at the individual level. Rather, I’m arguing that there is very real potential for a net negative impact. As a coach, I’ve spent way too many cumulative hours reminding clients not to get too upset about an obviously erroneous but very discouraging body composition assessment, only to later remind them that the next (but also erroneous) estimate is probably a little too good to be true. If we aren’t careful with our interpretation, these types of inaccurate estimates may convince us that effective programs aren’t working, or that our extremely suboptimal programs are working better than they truly are. These scenarios result in unnecessary ups and downs that can unfavorably impact our psychology, while fueling bad decisions based on erroneous values that ultimately serve to hinder our progress. For a concrete example, I once got a DXA reading of ~6% body-fat during contest preparation for a bodybuilding show. Fortunately, I was measuring out of curiosity alone, and knew to take the estimate with a huge grain of salt. In reality, I had 15 more pounds to lose before I was stage-ready (for context, I only cut a total of ~35 pounds for that competition). In other words, the DXA said I was just about done with my cut, but I was closer to the halfway point than the finish line. If I had trusted the machine more than my eyes, there is no question that I would have fallen short of my goals that season.

In summary, body composition measurement devices are very important for group-level research applications. We (collectively) are very fortunate that these devices exist, and we owe a debt of gratitude to the folks whose efforts have made them possible and continue to make them better and better. However, I would personally never act upon the result of an individual-level body composition estimate from these types of commercially accessible devices, and I discourage my clients from using them. Simply put, there are better ways to make inferences about an individual’s physique, performance, or health status. The simplest approach for tracking physique-related changes is to monitor changes in scale weight, while also keeping an eye on changes in clothing fit and visual appearance. If you’d like to kick things up a notch and get more quantitative data, measuring circumferences and/or skinfold thicknesses would be very useful. In the context of assessing adiposity or muscularity, these methods will offer much more utility than any of the measurement devices commonly found in gyms, households, or healthcare facilities.

Note: This article was published in partnership with MASS Research Review. Full versions of Research Spotlight breakdowns are originally published in MASS Research Review. Subscribe to MASS to get a monthly publication with breakdowns of recent exercise and nutrition studies.