Of late, bashing “bro-science” has come into vogue, and I understand why. The fitness industry has very low barriers to entry, and for a long time many of the claims made by people in the industry, with an utter paucity of evidence behind them, more closely resembled articles of faith than evidence-based statements from practitioners of a legitimate profession.

Such is often still the case, but within the last several years there has been a strong movement toward evidence-based training and nutrition. I think that, on the whole, the change has been a very positive one. However, I think that it is a reactionary movement by its very nature, and that the pendulum may have swung too far. The old guard used to mock the “pencil-necked nerds in lab coats” who didn’t have “in the trenches” experience – but now the same disdain is often seen from the other side, with evidence-based coaches mocking any claim that can’t be directly substantiated in the scientific literature.

I do think there’s a happy middle, and I think that’s where most people are starting to gravitate. So, what I want to do here is outline the strengths and weaknesses of relying solely on science for strength-related endeavors, and suggest that “bro-science” DOES still have a place – as long as it doesn’t try to claim too much and is content with receding as new evidence comes to light.

Science – what is it even?

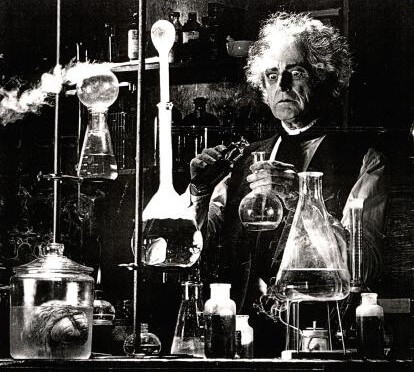

This is an important place to start. Most people have the wrong idea when it comes to science. Science, especially in the field of exercise physiology (and most biological sciences, for that matter), is NOT people in a lab poring over data before exclaiming “Eureka! This is precisely how this works!”

Rather, it’s a systematic way of asking questions, designing experiments to answer those questions with the fewest possible confounding factors, and assigning statistical likelihood to what’s probably happening. It’s very rare that you can claim anything even bordering on certainty using science. More than anything, it’s a reliable means of determining what’s NOT true, so we can get a little closer to approximating what actually IS true.

Science and fitness – strengths

1. Science is the best way humanity has devised so far to answer questions objectively.

Of course there will be some subjectivity in interpretation, but the scientific method is still the gold standard with regard to ruling out personal bias.

This means that if the current literature is backing up what you’re saying, you’re probably pretty close to right, regardless of other people’s opinions.

2. Science is pretty good at ruling out things that aren’t true.

When you see a p-value in a scientific study, it tells you how likely it would be to see an effect at least that large purely by chance if the intervention actually did nothing. Most exercise science studies use a cutoff of p<0.05. In other words, if the intervention truly had no effect, results like these would show up less than 5% of the time, so a study using that cutoff accepts roughly a 1-in-20 risk of reporting that something changed when, in fact, it didn’t. (Strictly speaking, p<0.05 doesn’t mean you’re 95% sure the intervention worked; it’s a guard against being fooled by chance.) Again, it’s not a perfect system, but it’s the best system humanity has devised so far for making claims with that degree of confidence.
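To make the 5% figure concrete, here’s a small Python simulation (a sketch, not a real statistics package) of thousands of fake “studies” in which the intervention truly does nothing. It uses a simple two-sample z-test that assumes the true standard deviation is known; the function names, the group size of 20, and the number of trials are arbitrary choices for illustration. Roughly 1 in 20 of these no-effect studies should still come out “significant” at p<0.05, which is the false-positive risk described above.

```python
import random
from statistics import NormalDist, fmean


def two_sided_p(group_a, group_b, sigma=1.0):
    """Two-sample z-test p-value, assuming a known, shared standard deviation sigma."""
    se = sigma * (1 / len(group_a) + 1 / len(group_b)) ** 0.5
    z = (fmean(group_a) - fmean(group_b)) / se
    # Probability of a difference at least this extreme, in either direction,
    # if there were no real effect.
    return 2 * NormalDist().cdf(-abs(z))


def false_positive_rate(trials=2000, n=20, alpha=0.05, seed=1):
    """Run many hypothetical studies where the intervention truly does nothing."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        # Both groups come from the same distribution: no real effect exists.
        control = [rng.gauss(0, 1) for _ in range(n)]
        treatment = [rng.gauss(0, 1) for _ in range(n)]
        if two_sided_p(treatment, control) < alpha:
            hits += 1
    return hits / trials


print(f"'Significant' results with no real effect: {false_positive_rate():.1%}")
```

Changing `alpha` to 0.01 should shrink the false-positive rate to about 1%, at the cost of making real but small effects harder to detect.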

3. Science is self-correcting

People may have their pet ideas that they cling to in the face of all contrary evidence. However, science does not “believe” anything. Consensus forms when quality studies support a specific position, but it can change when better evidence becomes available. That doesn’t mean, as some have charged, that science is just another opinion, or that it “flip-flops.” The scientific method isn’t a way of finding final truth – it’s a way of moving closer and closer to the actual truth. Inflexibility in the face of a continuous stream of new evidence would be a weakness, not a strength.

Science and fitness – drawbacks

1. People often don’t read the science at all, or don’t read it properly (or beyond the abstract)

I won’t name names, but I can think of quite a few prominent fitness people who cite 40 research articles at the end of everything they write, on the assumption that readers will conclude the piece is valid since it has so much scientific support. So, in readers’ minds, it’s supported by science, even though few of them take the time to chase down the citations and see whether those studies do, in fact, support the claims in the piece.

For example, lots of diet articles making huge claims will cite research on diabetic or obese subjects. If you’re a healthy person, any claims supported by those citations probably don’t apply to you (and the author certainly shouldn’t claim scientific support). Ditto for rodent studies.

Then, even if you chase the citations, without full-text access you still can’t be sure whether a citation supports the author’s point, because important parts of the study (subject characteristics, research protocol, means of data collection and analysis, etc.) often aren’t included in the abstract.

2. Lack of studies on trained athletes

There’s more and more research on trained athletes every day, but most of the studies in the scientific literature are still done on untrained subjects. We all know that there are “noob gains” when you start training: beginners tend to improve on almost any reasonable program. That makes it hard to say for sure whether findings from a study on the general population will translate to an athletic setting (more often than not, they don’t).

3. Many studies look at acute changes, not chronic

This is, to the best of my understanding, because of funding difficulties. It’s a lot cheaper to train people once, draw some blood, and check out what happened on the micro-level than it is to train subjects and gather data for several months to observe whether longer-term adaptations (muscle gain, strength increases, fat loss, improved speed, decreased race times, etc.) actually manifest themselves.

The hype about certain exercise protocols acutely increasing growth hormone or testosterone levels is a case in point. These acute changes don’t necessarily translate into long-term increases in lean mass. Ditto for studies showing an acute increase in markers of protein synthesis with a particular dietary or training regimen – you can’t extrapolate from one session and infer progress on the scale of weeks, months, or years.

4. Many longer term studies use protocols that are only marginally relevant to normal training programs

If you want to know whether higher- or lower-volume squatting will lead to greater strength gains, the most scientifically rigorous way to answer that question is to constrain the rest of the participants’ lower body training, so you’re sure the squatting volume is the determining factor rather than some uncontrolled training variable. (Of course, sleep and nutrition may not be controlled for very well either, which is obviously a huge issue.) Unless your lower body training consists only of squats, and unless you’re at the same skill level as the study participants, you can’t apply the results directly to your training.

An issue here is that scientific studies are asking specific questions. “When controlling for total volume, will increasing exercise frequency cause greater improvement in strength?” is the type of question science tends to ask. “Will (insert specific program here) make me jacked?” isn’t the type of question science deals with. Results have to be relative to something. If it’s a control group that isn’t exercising at all, then positive results for the training group relative to the controls don’t tell you much. If it’s another training group doing some other protocol with an entirely different mix of variables, then you find out one program may be better than another in a specific context, but you don’t come away with any broader principles that could then be applied to future research – so it wouldn’t be of much interest to the scientific community.

5. We’re individuals, but science is dealing with averages

This is a hugely important point. When evaluating whether something produces significant results, you’re dealing with the averages of a data set, and the standard deviations (how spread out the data are). However, there will usually be outliers.

Let’s say you have a high volume program and a low volume program. The high volume program produced, on average, 30% better results, but one individual did horribly on the high volume program, and one individual on the low volume program saw better progress than anyone in the high volume group. Those values were subsumed by the overall results of their respective groups, and the finding of the study was that the higher volume program was significantly better.

What, then, should you say to those two outliers? Would the former have done even worse on the low volume program, and the latter have done even better on the high volume program? You don’t know. To state a high degree of confidence in any concept, scientifically, you have to deal with averages, not individuals. The best study in the world can’t negate the possibility of individual differences.

This is not to say that scientifically validated concepts can’t be applied to training individuals, but it does mean that you MUST have flexibility, because not every individual will see the same results from the same protocol.
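The two-outlier scenario can be made concrete with a few lines of Python. The numbers below are invented for illustration (they’re not from any study), chosen so the high volume group averages about 30% better while one lifter in each group bucks the trend. With only six people per group this toy data set wouldn’t support a significance claim; the point is only that a group average can hide individuals who respond very differently.

```python
from statistics import fmean, stdev

# Invented strength gains (%) for two hypothetical groups of six lifters.
low_volume = [10, 9, 11, 10, 8, 18]    # one low-volume lifter beats everyone
high_volume = [16, 17, 15, 17, 16, 5]  # one high-volume lifter does poorly


def summarize(name, gains):
    print(f"{name}: mean {fmean(gains):.1f}%, SD {stdev(gains):.1f}%")


summarize("Low volume", low_volume)
summarize("High volume", high_volume)

# Relative advantage of the high volume group's average over the low volume group's
advantage = fmean(high_volume) / fmean(low_volume) - 1
print(f"High volume did {advantage:.0%} better on average")

# Individuals whose results run against the group-level finding
beat_everyone = [g for g in low_volume if g > max(high_volume)]
trailed_everyone = [g for g in high_volume if g < min(low_volume)]
print("Low-volume lifters who beat every high-volume lifter:", beat_everyone)
print("High-volume lifters who trailed every low-volume lifter:", trailed_everyone)
```

The group summary favors high volume, yet neither outlier would be well served by simply following the average.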

6. Significance and relevance aren’t the same thing

Most people automatically think of the day-to-day definition of “significant” – something really important and noteworthy. In research, on the other hand, “statistically significant” means that the difference passed a statistical test (usually p<0.05), so it’s unlikely to be explained by chance alone – but that doesn’t necessarily mean the difference is large enough to matter.

If you had groups of 1000 people and put them on two different weight loss protocols, and one group lost 30 pounds on average while the other lost 31, that may very well meet the criteria for a “significant” finding. But that doesn’t mean that it makes any real-world difference.

Don’t be misled when people are claiming a “significant” finding. Figure out how large the difference actually is, and ask yourself whether that difference actually matters.
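Here’s a rough sketch of the 30-versus-31-pound example in Python. The standard deviation of 5 pounds within each group is an assumption (the example above doesn’t give one), and the test is a simple two-sample z-test. Alongside the p-value it reports Cohen’s d, one common way to express the size of a difference in standard-deviation units; by Cohen’s rough conventions, 0.2 is “small,” 0.5 “medium,” and 0.8 “large.”

```python
from statistics import NormalDist


def compare_groups(mean_a, mean_b, sd, n_per_group):
    """Two-sample z-test plus Cohen's d, assuming both groups share standard deviation sd."""
    diff = mean_b - mean_a
    se = sd * (2 / n_per_group) ** 0.5
    z = diff / se
    p = 2 * NormalDist().cdf(-abs(z))  # two-sided p-value
    cohens_d = diff / sd               # difference expressed in standard-deviation units
    return diff, p, cohens_d


# 1,000 people per group; 30 lb vs. 31 lb average loss; SD of 5 lb is assumed.
diff, p, d = compare_groups(mean_a=30, mean_b=31, sd=5, n_per_group=1000)
print(f"Difference: {diff} lb ({diff / 30:.1%} of 30 lb)")
print(f"p-value: {p:.1g}")
print(f"Cohen's d: {d:.2f}")
```

With groups that large, a one-pound difference clears the p<0.05 bar easily, yet the effect size stays small. Whether one extra pound matters to a client is a judgment the p-value can’t make for you.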

So, when the rubber meets the road…

Read scientific studies to derive principles. They should be more of a compass than a road map: they point you in the right general direction; they DON’T give you step-by-step instructions.

It makes me think of the Greg Glassman quote about how no good training program was ever based on science. Scientists aren’t sitting around tinkering in a lab until one day they exclaim, “THIS is the perfect way to train! We’ve figured it out!” They’re figuring out how things work, and why certain things work, so that in-the-trenches coaches understand more about the principles and mechanisms in play when writing programs and coaching athletes.

Since there won’t be studies telling you exactly how to train or coach athletes, it’s up to you to gather, record, and analyze data on yourself and your athletes to know whether your programs are working. You may never write up your results and get them published, but you can apply the same process to your day-to-day practice. You won’t find the “truth,” but you’ll find things that don’t work and things that work a little better, and with that data you can constantly evolve and improve. To know what’s actually having an effect, though, adjust only one variable at a time. Completely starting over from scratch all the time doesn’t give you much of a base to work from.
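One low-tech way to apply this is a simple training log that compares each training block with the one before it and flags any block where more than one variable changed. The sketch below is hypothetical: the variable names, the outcome (estimated 1RM gain per block), and the numbers are made up, and a change in one person’s results from one block to the next can still be noise.

```python
# Each block of training: the variables you control, plus one outcome you track.
# All field names and numbers here are hypothetical examples.
training_log = [
    {"sets_per_week": 10, "sessions_per_week": 2, "est_1rm_gain_kg": 2.5},
    {"sets_per_week": 14, "sessions_per_week": 2, "est_1rm_gain_kg": 4.0},
    {"sets_per_week": 14, "sessions_per_week": 3, "est_1rm_gain_kg": 4.5},
    {"sets_per_week": 18, "sessions_per_week": 4, "est_1rm_gain_kg": 3.0},
]
OUTCOME = "est_1rm_gain_kg"


def review(log, outcome=OUTCOME):
    """Compare each block with the previous one, flagging multi-variable changes."""
    notes = []
    for prev, curr in zip(log, log[1:]):
        changed = [k for k in curr if k != outcome and curr[k] != prev[k]]
        delta = curr[outcome] - prev[outcome]
        if len(changed) == 1:
            var = changed[0]
            notes.append(f"{var}: {prev[var]} -> {curr[var]}, outcome {delta:+.1f}")
        else:
            # More (or fewer) than one change: the outcome can't be attributed.
            notes.append(f"{len(changed)} variables changed {changed}: can't attribute {delta:+.1f}")
    return notes


for line in review(training_log):
    print(line)
```

The last block changes both volume and frequency, so the drop in progress can’t be pinned on either one, which is exactly the situation the one-variable rule is meant to avoid.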

Where bro-science fits in

Science doesn’t have all the answers yet. There’s still a gulf between common practices in the gym and what’s been studied on highly trained subjects in the lab. Often, bro-science runs ahead of science, figuring out via trial and error WHAT works before science figures out WHY it works and offers evidence suggesting how it could be further improved. I think that, at its best, bro-science is sort of like an R&D department.

However, there should be limits. When bro-science contradicts something that’s been well supported by good research, real science should trump it.

When bro-science is claiming efficacy from a certain training practice, but it is found to be ineffective when studied in a controlled fashion, bro-science should step down and that practice should be discarded (at least within the context that it was found to be ineffective).

When bro-science is making claims, they should be provisional – they should be humble. “I think this works,” or “lots of lifters have had success doing things this way,” are perfectly acceptable statements. “This is what works, I’m sure of it, and no pencil-necked geek in a labcoat can say otherwise,” is not acceptable.

Wrapping it all up

Science and bro-science can, and should, work together. Science, as a fairly slow, methodical process, simply cannot ask as many questions, experiment as much, or try as many permutations of variables as bro-science can. However, anything claimed by bro-science should be posited with a strong note of uncertainty, since none of its claims have been tested as rigorously as true scientific claims have been.

More than anything, an understanding of how science works – including its strengths, its limitations, and its potential for directing day-to-day practice – should guide and inform every fitness professional and serious athlete.